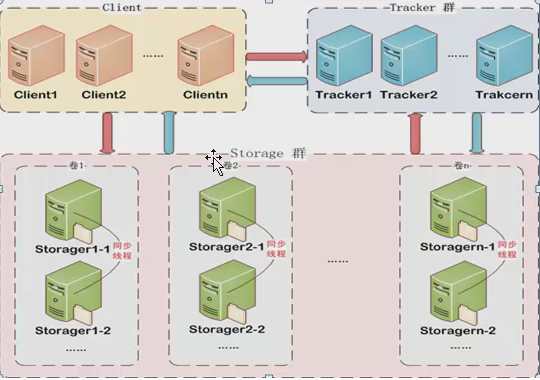

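FastDFS is an open-source, lightweight distributed file system. It can only be accessed through its own client API (C, Java, PHP). It is built for storing large volumes of data and suits file-centric online services such as photo-album sites, video sites and audiobook services. It has three components: 1. tracker servers, 2. storage servers and 3. clients. It works best with small to medium-sized files, roughly 4 KB to 500 MB.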

Role of the tracker server

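The tracker keeps metadata about the storage servers and handles scheduling, which load-balances client access across them. It holds the state of every group and storage server in the cluster in memory. Tracker servers are very efficient: even a large cluster (for example, hundreds of groups) generally needs only two.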
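The group-selection part of that scheduling can be sketched in bash. This is a simplified model, not the tracker's actual code. It assumes the free space of each group is already known and is fed in as hypothetical `group free_mb` lines. It covers only two of the `store_lookup` modes from tracker.conf: 1 means a fixed `store_group`, and 2 means the group with the most free space. Round robin (mode 0) is left out because it needs state kept between uploads.

```shell
# Hypothetical model of how the tracker picks a group for an upload.
# $1: store_lookup mode (1 = fixed group, 2 = most free space)
# $2: store_group, only used by mode 1
# stdin (mode 2): one "group_name free_mb" line per group (assumed format)
pick_group() {
  local mode=$1 store_group=${2-}
  case "$mode" in
    1) echo "$store_group" ;;
    # Sort numerically by free space, largest first, and keep the group name.
    2) sort -k2,2nr | head -n 1 | awk '{ print $1 }' ;;
    *) echo "mode $mode is not modelled in this sketch" >&2; return 1 ;;
  esac
}
```

For example, `printf 'dev 49054\nops 49100\n' | pick_group 2` prints `ops`.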

Role of the storage server

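This is where the data actually lives. Storage servers are organized into groups. Files are kept on disk as ordinary files, but under a name generated by FastDFS (the file ID returned to the client) instead of the original filename. Every storage server in a group holds the same data, much like RAID 1, and different groups do not communicate with each other. Multiple tracker servers can be run.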
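Every storage server in a group holds a full copy, so the group name at the front of a file ID is enough to tell which servers can serve the file. The following is a minimal sketch. It assumes the usual `group/Mxx/XX/YY/name` file-ID layout and a hypothetical membership list of `group ip` lines on stdin. `servers_for_file` is an illustrative helper, not a FastDFS tool.

```shell
# Print every storage server that should hold a copy of the file.
# $1: file ID, e.g. dev/M00/00/00/example.jpg (hypothetical name)
# stdin: membership list, one "group_name ip" pair per line (assumed format)
servers_for_file() {
  local group=${1%%/*}   # the group name is the first path component
  awk -v g="$group" '$1 == g { print $2 }'
}
```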

Environment:

| Hostname | IP |
| --- | --- |
| tracker-svr01 | 172.16.1.180 |
| tracker-svr02 | 172.16.1.181 |
| storage01 | 172.16.1.190 |
| storage02 | 172.16.1.191 |
| storage03 | 172.16.1.192 |

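Common steps (all servers)

Install the base environment:

Synchronize the clock on every server, for example with a cron job that runs every few minutes. FastDFS relies on consistent time across nodes, and clock skew can cause unpredictable problems. Then install the build dependencies and libfastcommon, which FastDFS requires: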

```
yum -y install zlib zlib-devel pcre pcre-devel gcc gcc-c++ openssl openssl-devel libevent libevent-devel perl unzip net-tools lsof tree wget vim
systemctl stop firewalld    # stop the firewall; also disable SELinux and synchronize the clock
tar zxf V1.0.36.tar.gz      # libfastcommon source
cd libfastcommon-1.0.36
./make.sh
./make.sh install
```
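One way to keep the clocks in step is a cron entry that runs `ntpdate` every five minutes. This sketch assumes the `ntpdate` package is installed (`yum -y install ntpdate`). It also assumes `pool.ntp.org` is reachable; you can substitute an internal NTP server. Running `chronyd` or `ntpd` as a service works equally well.

```
# /etc/crontab: sync the clock every 5 minutes
# (assumes ntpdate is installed and pool.ntp.org, or your own NTP server, is reachable)
*/5 * * * * root /usr/sbin/ntpdate pool.ntp.org >/dev/null 2>&1
```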

Tracker servers

Compile and install FastDFS on the tracker nodes:

```
tar zxf V5.08.tar.gz
cd fastdfs-5.08/
./make.sh
./make.sh install
```

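Prepare the tracker configuration file (run on every tracker server):

```
cd /etc/fdfs
cp tracker.conf.sample tracker.conf
```

Edit the configuration file. The settings marked "important" are the ones to review: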

```
[root@tracker-svr02 fdfs]# cat /etc/fdfs/tracker.conf
# is this config file disabled
# false for enabled
# true for disabled
# important
disabled=false

# bind an address of this host
# empty for bind all addresses of this host
# important: the IP address to bind
bind_addr=

# the tracker server port
# important
port=22122

# connect timeout in seconds
# default value is 30s
# important
connect_timeout=30

# network timeout in seconds
# default value is 30s
# important
network_timeout=60

# the base path to store data and log files
# data/log directory, not where client files are stored; important
base_path=/data/tracker/base

# max concurrent connections this server supported
# important: must be tuned; limited by ulimit
max_connections=256

# accept thread count
# default value is 1
# since V4.07
accept_threads=1

# work thread count, should <= max_connections
# default value is 4
# since V2.00
work_threads=4

# the method of selecting group to upload files
# 0: round robin
# 1: specify group
# 2: load balance, select the max free space group to upload file
store_lookup=2

# which group to upload file
# when store_lookup set to 1, must set store_group to the group name
store_group=group2

# which storage server to upload file
# 0: round robin (default)
# 1: the first server order by ip address
# 2: the first server order by priority (the minimal)
store_server=0

# which path(means disk or mount point) of the storage server to upload file
# 0: round robin
# 2: load balance, select the max free space path to upload file
store_path=0

# which storage server to download file
# 0: round robin (default)
# 1: the source storage server which the current file uploaded to
download_server=0

# reserved storage space for system or other applications.
# if the free(available) space of any stoarge server in
# a group <= reserved_storage_space,
# no file can be uploaded to this group.
# bytes unit can be one of follows:
### G or g for gigabyte(GB)
### M or m for megabyte(MB)
### K or k for kilobyte(KB)
### no unit for byte(B)
### XX.XX% as ratio such as reserved_storage_space = 10%
reserved_storage_space = 10%

#standard log level as syslog, case insensitive, value list:
### emerg for emergency
### alert
### crit for critical
### error
### warn for warning
### notice
### info
### debug
log_level=info

#unix group name to run this program,
#not set (empty) means run by the group of current user
run_by_group=

#unix username to run this program,
#not set (empty) means run by current user
run_by_user=

# allow_hosts can ocur more than once, host can be hostname or ip address,
# "*" (only one asterisk) means match all ip addresses
# we can use CIDR ips like 192.168.5.64/26
# and also use range like these: 10.0.1.[0-254] and host[01-08,20-25].domain.com
# for example:
# allow_hosts=10.0.1.[1-15,20]
# allow_hosts=host[01-08,20-25].domain.com
# allow_hosts=192.168.5.64/26
allow_hosts=*

# sync log buff to disk every interval seconds
# default value is 10 seconds
sync_log_buff_interval = 10

# check storage server alive interval seconds
check_active_interval = 120

# thread stack size, should >= 64KB
# default value is 64KB
thread_stack_size = 64KB

# auto adjust when the ip address of the storage server changed
# default value is true
storage_ip_changed_auto_adjust = true

# storage sync file max delay seconds
# default value is 86400 seconds (one day)
# since V2.00
storage_sync_file_max_delay = 86400

# the max time of storage sync a file
# default value is 300 seconds
# since V2.00
storage_sync_file_max_time = 300

# if use a trunk file to store several small files
# default value is false
# since V3.00
use_trunk_file = false

# the min slot size, should <= 4KB
# default value is 256 bytes
# since V3.00
slot_min_size = 256

# the max slot size, should > slot_min_size
# store the upload file to trunk file when it's size <= this value
# default value is 16MB
# since V3.00
slot_max_size = 16MB

# the trunk file size, should >= 4MB
# default value is 64MB
# since V3.00
trunk_file_size = 64MB

# if create trunk file advancely
# default value is false
# since V3.06
trunk_create_file_advance = false

# the time base to create trunk file
# the time format: HH:MM
# default value is 02:00
# since V3.06
trunk_create_file_time_base = 02:00

# the interval of create trunk file, unit: second
# default value is 38400 (one day)
# since V3.06
trunk_create_file_interval = 86400

# the threshold to create trunk file
# when the free trunk file size less than the threshold, will create
# the trunk files
# default value is 0
# since V3.06
trunk_create_file_space_threshold = 20G

# if check trunk space occupying when loading trunk free spaces
# the occupied spaces will be ignored
# default value is false
# since V3.09
# NOTICE: set this parameter to true will slow the loading of trunk spaces
# when startup. you should set this parameter to true when neccessary.
trunk_init_check_occupying = false

# if ignore storage_trunk.dat, reload from trunk binlog
# default value is false
# since V3.10
# set to true once for version upgrade when your version less than V3.10
trunk_init_reload_from_binlog = false

# the min interval for compressing the trunk binlog file
# unit: second
# default value is 0, 0 means never compress
# FastDFS compress the trunk binlog when trunk init and trunk destroy
# recommand to set this parameter to 86400 (one day)
# since V5.01
trunk_compress_binlog_min_interval = 0

# if use storage ID instead of IP address
# default value is false
# since V4.00
use_storage_id = false

# specify storage ids filename, can use relative or absolute path
# since V4.00
storage_ids_filename = storage_ids.conf

# id type of the storage server in the filename, values are:
## ip: the ip address of the storage server
## id: the server id of the storage server
# this paramter is valid only when use_storage_id set to true
# default value is ip
# since V4.03
id_type_in_filename = ip

# if store slave file use symbol link
# default value is false
# since V4.01
store_slave_file_use_link = false

# if rotate the error log every day
# default value is false
# since V4.02
rotate_error_log = false

# rotate error log time base, time format: Hour:Minute
# Hour from 0 to 23, Minute from 0 to 59
# default value is 00:00
# since V4.02
error_log_rotate_time=00:00

# rotate error log when the log file exceeds this size
# 0 means never rotates log file by log file size
# default value is 0
# since V4.02
rotate_error_log_size = 0

# keep days of the log files
# 0 means do not delete old log files
# default value is 0
log_file_keep_days = 0

# if use connection pool
# default value is false
# since V4.05
use_connection_pool = false

# connections whose the idle time exceeds this time will be closed
# unit: second
# default value is 3600
# since V4.05
connection_pool_max_idle_time = 3600

# HTTP port on this tracker server
http.server_port=8080

# check storage HTTP server alive interval seconds
# <= 0 for never check
# default value is 30
http.check_alive_interval=30

# check storage HTTP server alive type, values are:
#   tcp : connect to the storge server with HTTP port only,
#        do not request and get response
#   http: storage check alive url must return http status 200
# default value is tcp
http.check_alive_type=tcp

# check storage HTTP server alive uri/url
# NOTE: storage embed HTTP server support uri: /status.html
http.check_alive_uri=/status.html
```
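The `reserved_storage_space` rule can be made concrete with a bash sketch of the check the comments describe: uploads stop once free space is less than or equal to the reserved amount. This is an illustration, not FastDFS code. The hypothetical `can_upload` helper works in MB and handles percentages and whole-number G/M/K sizes. It does not handle the plain-bytes form.

```shell
# Succeeds (exit 0) if a server with the given space may still receive uploads.
# $1: total space in MB, $2: free space in MB
# $3: reserved_storage_space value, e.g. 10%, 20G, 512M, 1048576K
can_upload() {
  local total_mb=$1 free_mb=$2 reserved=$3
  local num=${reserved%?} reserved_mb   # value without its one-character suffix
  case "$reserved" in
    *%)    reserved_mb=$(awk -v t="$total_mb" -v p="$num" 'BEGIN { printf "%d", t * p / 100 }') ;;
    *[Gg]) reserved_mb=$(( num * 1024 )) ;;
    *[Mm]) reserved_mb=$(( num )) ;;
    *[Kk]) reserved_mb=$(( num / 1024 )) ;;
    *)     echo "unsupported reserved_storage_space: $reserved" >&2; return 2 ;;
  esac
  # Uploads are refused when free space <= reserved space.
  (( free_mb > reserved_mb ))
}
```

Take the disk figures that fdfs_monitor reports for this cluster (51175 MB total, 49054 MB free) and the 10% setting above. The reserve is then about 5117 MB, so `can_upload 51175 49054 10%` succeeds.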

The max_connections setting is limited by the open-file limit (ulimit -n), so raise that limit first:

```
[root@storage01 libfastcommon-1.0.36]# ulimit -n 65535
```
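`ulimit -n` only changes the limit for the current shell and the processes started from it. Below is a sketch of making the limit persistent, assuming a systemd-based system such as CentOS 7 that manages the daemons as services. The first fragment covers login sessions. The second is a hypothetical drop-in for the `fdfs_trackerd` unit; a similar drop-in would apply to any storage daemon run as a service.

```
# /etc/security/limits.conf: applies to new login sessions
*    soft    nofile    65535
*    hard    nofile    65535

# /etc/systemd/system/fdfs_trackerd.service.d/limits.conf
# hypothetical drop-in for the systemd-managed tracker; apply with
#   systemctl daemon-reload && systemctl restart fdfs_trackerd
[Service]
LimitNOFILE=65535
```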

Create the directory specified by base_path:

```
[root@tracker-svr01 fdfs]# mkdir /data/tracker/base -p
```

Start the tracker server and check that it is listening on port 22122:

```
[root@tracker-svr01 fdfs]# systemctl start fdfs_trackerd
[root@tracker-svr01 fdfs]# ps -ef|grep fdfs
root 1909 1 0 00:47 ? 00:00:00 /usr/bin/fdfs_trackerd /etc/fdfs/tracker.conf
[root@tracker-svr01 fdfs]# netstat -lntup|grep fdfs
tcp 0 0 0.0.0.0:22122 0.0.0.0:* LISTEN 1909/fdfs_trackerd
```

Storage nodes (each node runs two groups, ops and dev)

Compile and install FastDFS on the storage nodes:

```
tar zxf V5.08.tar.gz
cd fastdfs-5.08/
./make.sh
./make.sh install
```

Create one storage configuration file per group:

```
[root@storage01 libfastcommon-1.0.36]# cd /etc/fdfs/
[root@storage01 fdfs]# mkdir storage_conf
[root@storage01 fdfs]# cp storage.conf.sample storage_conf/storage_dev.conf
[root@storage01 fdfs]# cp storage.conf.sample storage_conf/storage_ops.conf
[root@storage01 fdfs]# cd storage_conf/
[root@storage01 storage_conf]# ls
storage_dev.conf storage_ops.conf
```
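The two files differ in only a handful of keys, so they can also be generated from the sample instead of edited by hand. The following is a minimal bash sketch. The function name `make_group_conf` and its argument order are made up for this example. It assumes the sample contains single `group_name=`, `port=`, `base_path=` and `store_path0=` lines, as storage.conf.sample does.

```shell
# make_group_conf SAMPLE OUT GROUP PORT DATA_ROOT TRACKER...
# Writes OUT with the per-group keys rewritten and one tracker_server line
# per TRACKER ("host:port") argument.
make_group_conf() {
  local sample=$1 out=$2 group=$3 port=$4 root=$5
  shift 5
  # Rewrite the per-group keys and drop the sample's tracker_server lines.
  sed -e "s|^group_name=.*|group_name=${group}|" \
      -e "s|^port=.*|port=${port}|" \
      -e "s|^base_path=.*|base_path=${root}/${group}/base|" \
      -e "s|^store_path0=.*|store_path0=${root}/${group}/store|" \
      -e '/^tracker_server=/d' \
      "$sample" > "$out" || return 1
  # tracker_server may occur more than once, so append one line per tracker.
  local t
  for t in "$@"; do
    echo "tracker_server=${t}" >> "$out"
  done
}
```

For example: `make_group_conf storage.conf.sample storage_conf/storage_ops.conf ops 23001 /data/storage 172.16.1.180:22122 172.16.1.181:22122`. The tracker addresses here come from the environment table; replace them with your own.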

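Configuration for the dev group. Each group on the same host needs its own port, base_path and store_path0, and the tracker_server entries must point to your tracker servers: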

```
[root@storage01 storage_conf]# cat storage_dev.conf
# is this config file disabled
# false for enabled
# true for disabled
disabled=false

# the name of the group this storage server belongs to
#
# comment or remove this item for fetching from tracker server,
# in this case, use_storage_id must set to true in tracker.conf,
# and storage_ids.conf must be configed correctly.
# group name; important
group_name=dev

# bind an address of this host
# empty for bind all addresses of this host
bind_addr=

# if bind an address of this host when connect to other servers
# (this storage server as a client)
# true for binding the address configed by above parameter: "bind_addr"
# false for binding any address of this host
client_bind=true

# the storage server port
# with several groups on one host, each group needs a different port
port=23000

# connect timeout in seconds
# default value is 30s
connect_timeout=30

# network timeout in seconds
# default value is 30s
network_timeout=60

# heart beat interval in seconds
heart_beat_interval=30

# disk usage report interval in seconds
stat_report_interval=60

# the base path to store data and log files
base_path=/data/storage/dev/base

# max concurrent connections the server supported
# default value is 256
# more max_connections means more memory will be used
max_connections=256

# the buff size to recv / send data
# this parameter must more than 8KB
# default value is 64KB
# since V2.00
buff_size = 256KB

# accept thread count
# default value is 1
# since V4.07
accept_threads=1

# work thread count, should <= max_connections
# work thread deal network io
# default value is 4
# since V2.00
work_threads=4

# if disk read / write separated
##  false for mixed read and write
##  true for separated read and write
# default value is true
# since V2.00
disk_rw_separated = true

# disk reader thread count per store base path
# for mixed read / write, this parameter can be 0
# default value is 1
# since V2.00
disk_reader_threads = 1

# disk writer thread count per store base path
# for mixed read / write, this parameter can be 0
# default value is 1
# since V2.00
disk_writer_threads = 1

# when no entry to sync, try read binlog again after X milliseconds
# must > 0, default value is 200ms
sync_wait_msec=50

# after sync a file, usleep milliseconds
# 0 for sync successively (never call usleep)
sync_interval=0

# storage sync start time of a day, time format: Hour:Minute
# Hour from 0 to 23, Minute from 0 to 59
sync_start_time=00:00

# storage sync end time of a day, time format: Hour:Minute
# Hour from 0 to 23, Minute from 0 to 59
sync_end_time=23:59

# write to the mark file after sync N files
# default value is 500
write_mark_file_freq=500

# path(disk or mount point) count, default value is 1
store_path_count=1

# store_path#, based 0, if store_path0 not exists, it's value is base_path
# the paths must be exist
store_path0=/data/storage/dev/store
#store_path1=/home/yuqing/fastdfs2

# subdir_count * subdir_count directories will be auto created under each
# store_path (disk), value can be 1 to 256, default value is 256
subdir_count_per_path=256

# tracker_server can ocur more than once, and tracker_server format is
#  "host:port", host can be hostname or ip address
# important
tracker_server=172.16.1.181:22122
tracker_server=172.16.1.182:22122

#standard log level as syslog, case insensitive, value list:
### emerg for emergency
### alert
### crit for critical
### error
### warn for warning
### notice
### info
### debug
log_level=info

#unix group name to run this program,
#not set (empty) means run by the group of current user
run_by_group=

#unix username to run this program,
#not set (empty) means run by current user
run_by_user=

# allow_hosts can ocur more than once, host can be hostname or ip address,
# "*" (only one asterisk) means match all ip addresses
# we can use CIDR ips like 192.168.5.64/26
# and also use range like these: 10.0.1.[0-254] and host[01-08,20-25].domain.com
# for example:
# allow_hosts=10.0.1.[1-15,20]
# allow_hosts=host[01-08,20-25].domain.com
# allow_hosts=192.168.5.64/26
allow_hosts=*

# the mode of the files distributed to the data path
# 0: round robin(default)
# 1: random, distributted by hash code
file_distribute_path_mode=0

# valid when file_distribute_to_path is set to 0 (round robin),
# when the written file count reaches this number, then rotate to next path
# default value is 100
file_distribute_rotate_count=100

# call fsync to disk when write big file
# 0: never call fsync
# other: call fsync when written bytes >= this bytes
# default value is 0 (never call fsync)
fsync_after_written_bytes=0

# sync log buff to disk every interval seconds
# must > 0, default value is 10 seconds
sync_log_buff_interval=10

# sync binlog buff / cache to disk every interval seconds
# default value is 60 seconds
sync_binlog_buff_interval=10

# sync storage stat info to disk every interval seconds
# default value is 300 seconds
sync_stat_file_interval=300

# thread stack size, should >= 512KB
# default value is 512KB
thread_stack_size=512KB

# the priority as a source server for uploading file.
# the lower this value, the higher its uploading priority.
# default value is 10
upload_priority=10

# the NIC alias prefix, such as eth in Linux, you can see it by ifconfig -a
# multi aliases split by comma. empty value means auto set by OS type
# default values is empty
if_alias_prefix=

# if check file duplicate, when set to true, use FastDHT to store file indexes
# 1 or yes: need check
# 0 or no: do not check
# default value is 0
check_file_duplicate=0

# file signature method for check file duplicate
## hash: four 32 bits hash code
## md5: MD5 signature
# default value is hash
# since V4.01
file_signature_method=hash

# namespace for storing file indexes (key-value pairs)
# this item must be set when check_file_duplicate is true / on
key_namespace=FastDFS

# set keep_alive to 1 to enable persistent connection with FastDHT servers
# default value is 0 (short connection)
keep_alive=0

# you can use "#include filename" (not include double quotes) directive to
# load FastDHT server list, when the filename is a relative path such as
# pure filename, the base path is the base path of current/this config file.
# must set FastDHT server list when check_file_duplicate is true / on
# please see INSTALL of FastDHT for detail
##include /home/yuqing/fastdht/conf/fdht_servers.conf

# if log to access log
# default value is false
# since V4.00
use_access_log = false

# if rotate the access log every day
# default value is false
# since V4.00
rotate_access_log = false

# rotate access log time base, time format: Hour:Minute
# Hour from 0 to 23, Minute from 0 to 59
# default value is 00:00
# since V4.00
access_log_rotate_time=00:00

# if rotate the error log every day
# default value is false
# since V4.02
rotate_error_log = false

# rotate error log time base, time format: Hour:Minute
# Hour from 0 to 23, Minute from 0 to 59
# default value is 00:00
# since V4.02
error_log_rotate_time=00:00

# rotate access log when the log file exceeds this size
# 0 means never rotates log file by log file size
# default value is 0
# since V4.02
rotate_access_log_size = 0

# rotate error log when the log file exceeds this size
# 0 means never rotates log file by log file size
# default value is 0
# since V4.02
rotate_error_log_size = 0

# keep days of the log files
# 0 means do not delete old log files
# default value is 0
log_file_keep_days = 0

# if skip the invalid record when sync file
# default value is false
# since V4.02
file_sync_skip_invalid_record=false

# if use connection pool
# default value is false
# since V4.05
use_connection_pool = false

# connections whose the idle time exceeds this time will be closed
# unit: second
# default value is 3600
# since V4.05
connection_pool_max_idle_time = 3600

# use the ip address of this storage server if domain_name is empty,
# else this domain name will ocur in the url redirected by the tracker server
http.domain_name=

# the port of the web server on this storage server
http.server_port=8888
```

Configuration for the ops group:

```
[root@storage01 storage_conf]# cat storage_ops.conf
# is this config file disabled
# false for enabled
# true for disabled
disabled=false

# the name of the group this storage server belongs to
#
# comment or remove this item for fetching from tracker server,
# in this case, use_storage_id must set to true in tracker.conf,
# and storage_ids.conf must be configed correctly.
group_name=ops

# bind an address of this host
# empty for bind all addresses of this host
bind_addr=

# if bind an address of this host when connect to other servers
# (this storage server as a client)
# true for binding the address configed by above parameter: "bind_addr"
# false for binding any address of this host
client_bind=true

# the storage server port
port=23001

# connect timeout in seconds
# default value is 30s
connect_timeout=30

# network timeout in seconds
# default value is 30s
network_timeout=60

# heart beat interval in seconds
heart_beat_interval=30

# disk usage report interval in seconds
stat_report_interval=60

# the base path to store data and log files
base_path=/data/storage/ops/base

# max concurrent connections the server supported
# default value is 256
# more max_connections means more memory will be used
max_connections=256

# the buff size to recv / send data
# this parameter must more than 8KB
# default value is 64KB
# since V2.00
buff_size = 256KB

# accept thread count
# default value is 1
# since V4.07
accept_threads=1

# work thread count, should <= max_connections
# work thread deal network io
# default value is 4
# since V2.00
work_threads=4

# if disk read / write separated
##  false for mixed read and write
##  true for separated read and write
# default value is true
# since V2.00
disk_rw_separated = true

# disk reader thread count per store base path
# for mixed read / write, this parameter can be 0
# default value is 1
# since V2.00
disk_reader_threads = 1

# disk writer thread count per store base path
# for mixed read / write, this parameter can be 0
# default value is 1
# since V2.00
disk_writer_threads = 1

# when no entry to sync, try read binlog again after X milliseconds
# must > 0, default value is 200ms
sync_wait_msec=50

# after sync a file, usleep milliseconds
# 0 for sync successively (never call usleep)
sync_interval=0

# storage sync start time of a day, time format: Hour:Minute
# Hour from 0 to 23, Minute from 0 to 59
sync_start_time=00:00

# storage sync end time of a day, time format: Hour:Minute
# Hour from 0 to 23, Minute from 0 to 59
sync_end_time=23:59

# write to the mark file after sync N files
# default value is 500
write_mark_file_freq=500

# path(disk or mount point) count, default value is 1
store_path_count=1

# store_path#, based 0, if store_path0 not exists, it's value is base_path
# the paths must be exist
store_path0=/data/storage/ops/store
#store_path1=/home/yuqing/fastdfs2

# subdir_count * subdir_count directories will be auto created under each
# store_path (disk), value can be 1 to 256, default value is 256
subdir_count_per_path=256

# tracker_server can ocur more than once, and tracker_server format is
#  "host:port", host can be hostname or ip address
tracker_server=10.0.0.181:22122
tracker_server=10.0.0.182:22122

#standard log level as syslog, case insensitive, value list:
### emerg for emergency
### alert
### crit for critical
### error
### warn for warning
### notice
### info
### debug
log_level=info

#unix group name to run this program,
#not set (empty) means run by the group of current user
run_by_group=

#unix username to run this program,
#not set (empty) means run by current user
run_by_user=

# allow_hosts can ocur more than once, host can be hostname or ip address,
# "*" (only one asterisk) means match all ip addresses
# we can use CIDR ips like 192.168.5.64/26
# and also use range like these: 10.0.1.[0-254] and host[01-08,20-25].domain.com
# for example:
# allow_hosts=10.0.1.[1-15,20]
# allow_hosts=host[01-08,20-25].domain.com
# allow_hosts=192.168.5.64/26
allow_hosts=*

# the mode of the files distributed to the data path
# 0: round robin(default)
# 1: random, distributted by hash code
file_distribute_path_mode=0

# valid when file_distribute_to_path is set to 0 (round robin),
# when the written file count reaches this number, then rotate to next path
# default value is 100
file_distribute_rotate_count=100

# call fsync to disk when write big file
# 0: never call fsync
# other: call fsync when written bytes >= this bytes
# default value is 0 (never call fsync)
fsync_after_written_bytes=0

# sync log buff to disk every interval seconds
# must > 0, default value is 10 seconds
sync_log_buff_interval=10

# sync binlog buff / cache to disk every interval seconds
# default value is 60 seconds
sync_binlog_buff_interval=10

# sync storage stat info to disk every interval seconds
# default value is 300 seconds
sync_stat_file_interval=300

# thread stack size, should >= 512KB
# default value is 512KB
thread_stack_size=512KB

# the priority as a source server for uploading file.
# the lower this value, the higher its uploading priority.
# default value is 10
upload_priority=10

# the NIC alias prefix, such as eth in Linux, you can see it by ifconfig -a
# multi aliases split by comma. empty value means auto set by OS type
# default values is empty
if_alias_prefix=

# if check file duplicate, when set to true, use FastDHT to store file indexes
# 1 or yes: need check
# 0 or no: do not check
# default value is 0
check_file_duplicate=0

# file signature method for check file duplicate
## hash: four 32 bits hash code
## md5: MD5 signature
# default value is hash
# since V4.01
file_signature_method=hash

# namespace for storing file indexes (key-value pairs)
# this item must be set when check_file_duplicate is true / on
key_namespace=FastDFS

# set keep_alive to 1 to enable persistent connection with FastDHT servers
# default value is 0 (short connection)
keep_alive=0

# you can use "#include filename" (not include double quotes) directive to
# load FastDHT server list, when the filename is a relative path such as
# pure filename, the base path is the base path of current/this config file.
# must set FastDHT server list when check_file_duplicate is true / on
# please see INSTALL of FastDHT for detail
##include /home/yuqing/fastdht/conf/fdht_servers.conf

# if log to access log
# default value is false
# since V4.00
use_access_log = false

# if rotate the access log every day
# default value is false
# since V4.00
rotate_access_log = false

# rotate access log time base, time format: Hour:Minute
# Hour from 0 to 23, Minute from 0 to 59
# default value is 00:00
# since V4.00
access_log_rotate_time=00:00

# if rotate the error log every day
# default value is false
# since V4.02
rotate_error_log = false

# rotate error log time base, time format: Hour:Minute
# Hour from 0 to 23, Minute from 0 to 59
# default value is 00:00
# since V4.02
error_log_rotate_time=00:00

# rotate access log when the log file exceeds this size
# 0 means never rotates log file by log file size
# default value is 0
# since V4.02
rotate_access_log_size = 0

# rotate error log when the log file exceeds this size
# 0 means never rotates log file by log file size
# default value is 0
# since V4.02
rotate_error_log_size = 0

# keep days of the log files
# 0 means do not delete old log files
# default value is 0
log_file_keep_days = 0

# if skip the invalid record when sync file
# default value is false
# since V4.02
file_sync_skip_invalid_record=false

# if use connection pool
# default value is false
# since V4.05
use_connection_pool = false

# connections whose the idle time exceeds this time will be closed
# unit: second
# default value is 3600
# since V4.05
connection_pool_max_idle_time = 3600

# use the ip address of this storage server if domain_name is empty,
# else this domain name will ocur in the url redirected by the tracker server
http.domain_name=

# the port of the web server on this storage server
http.server_port=8888
```
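With `subdir_count_per_path=256`, each store path gets 256 * 256 = 65,536 subdirectories under `<store_path>/data/`. The `Mxx` part of a file ID selects the store path, so M00 is `store_path0`. The bash sketch below resolves a file ID to its on-disk location under those assumptions. `file_id_to_path` is a hypothetical helper, and it reads the two characters after `M` as a hexadecimal index.

```shell
# file_id_to_path FILE_ID STORE_PATH0 [STORE_PATH1 ...]
# Prints <store_pathN>/data/<XX>/<YY>/<name> for a "group/MNN/XX/YY/name" ID.
file_id_to_path() {
  local id=$1; shift
  local group store d1 d2 name idx
  IFS=/ read -r group store d1 d2 name <<< "$id"
  if ! [[ -n $name && $store =~ ^M([0-9A-Fa-f]{2})$ ]]; then
    echo "malformed file id: $id" >&2
    return 1
  fi
  idx=$((16#${BASH_REMATCH[1]}))        # M00 -> 0, M01 -> 1, ...
  if (( idx >= $# )); then
    echo "no store_path${idx} configured" >&2
    return 1
  fi
  local paths=("$@")
  echo "${paths[idx]}/data/${d1}/${d2}/${name}"
}
```

For example, `file_id_to_path dev/M00/00/0A/example.jpg /data/storage/dev/store` prints `/data/storage/dev/store/data/00/0A/example.jpg`.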

Create the base directories:

```
[root@storage01 storage_conf]# mkdir /data/storage/dev/base -p
[root@storage01 storage_conf]# mkdir /data/storage/ops/base -p
```

Create the directories where client files are stored (multiple store paths are supported):

```
[root@storage01 storage_conf]# mkdir /data/storage/dev/store -p
[root@storage01 storage_conf]# mkdir /data/storage/ops/store -p
```
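When more groups are added, a small loop keeps the layout consistent. This is a sketch: `make_group_dirs` is a hypothetical helper that creates `base/` and `store/` for each group under a data root.

```shell
# make_group_dirs DATA_ROOT GROUP...
# Creates DATA_ROOT/<group>/base and DATA_ROOT/<group>/store for every group.
make_group_dirs() {
  local root=$1; shift
  local g
  for g in "$@"; do
    mkdir -p "${root}/${g}/base" "${root}/${g}/store" || return 1
  done
}
```

On the nodes above this would be `make_group_dirs /data/storage dev ops`.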

Start the storage servers, one fdfs_storaged instance per group, on every storage node:

```
[root@storage03 storage_conf]# /usr/bin/fdfs_storaged /etc/fdfs/storage_conf/storage_dev.conf start
[root@storage03 storage_conf]# /usr/bin/fdfs_storaged /etc/fdfs/storage_conf/storage_ops.conf start
```

Check that both instances are running:

```
[root@storage01 storage_conf]# netstat -lntp|grep fdfs
tcp 0 0 0.0.0.0:23000 0.0.0.0:* LISTEN 2367/fdfs_storaged
tcp 0 0 0.0.0.0:23001 0.0.0.0:* LISTEN 2371/fdfs_storaged
[root@storage01 storage_conf]# ps -ef|grep fdfs
root 2367 1 45 01:46 ? 00:01:15 /usr/bin/fdfs_storaged /etc/fdfs/storage_conf/storage_dev.conf start
root 2371 1 44 01:46 ? 00:01:08 /usr/bin/fdfs_storaged /etc/fdfs/storage_conf/storage_ops.conf start
```
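Instead of reading the `netstat` output by eye, a bash sketch can check that each expected port is in the LISTEN state and owned by the expected process. `check_listening` is a hypothetical helper that reads `netstat -lntp` output on stdin.

```shell
# check_listening PROGRAM PORT...  (netstat -lntp output on stdin)
# Returns 0 only if every PORT is listed as LISTEN and owned by PROGRAM.
check_listening() {
  local prog=$1; shift
  local out port missing=0
  out=$(cat)
  for port in "$@"; do
    # Match "<addr>:<port> <foreign> LISTEN <pid>/<prog>" on a single line.
    if ! grep -Eq ":${port}[[:space:]].*LISTEN.*${prog}" <<< "$out"; then
      echo "port ${port}: no LISTEN socket for ${prog}" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

On a storage node, run `netstat -lntp | check_listening fdfs_storaged 23000 23001`. On a tracker, run `netstat -lntp | check_listening fdfs_trackerd 22122`.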

Client test

Set up a client for testing. Here storage01 doubles as the client:

```
[root@storage01 fdfs]# cp client.conf.sample client.conf
```

Edit the configuration file:

```
[root@storage01 fdfs]# cat client.conf
# connect timeout in seconds
# default value is 30s
connect_timeout=30

# network timeout in seconds
# default value is 30s
network_timeout=60

# the base path to store log files
base_path=/home/guilin/fastdfs

# tracker_server can ocur more than once, and tracker_server format is
#  "host:port", host can be hostname or ip address
tracker_server=10.0.0.181:22122
tracker_server=10.0.0.182:22122

#standard log level as syslog, case insensitive, value list:
### emerg for emergency
### alert
### crit for critical
### error
### warn for warning
### notice
### info
### debug
log_level=info

# if use connection pool
# default value is false
# since V4.05
use_connection_pool = false

# connections whose the idle time exceeds this time will be closed
# unit: second
# default value is 3600
# since V4.05
connection_pool_max_idle_time = 3600

# if load FastDFS parameters from tracker server
# since V4.05
# default value is false
load_fdfs_parameters_from_tracker=false

# if use storage ID instead of IP address
# same as tracker.conf
# valid only when load_fdfs_parameters_from_tracker is false
# default value is false
# since V4.05
use_storage_id = false

# specify storage ids filename, can use relative or absolute path
# same as tracker.conf
# valid only when load_fdfs_parameters_from_tracker is false
# since V4.05
storage_ids_filename = storage_ids.conf

#HTTP settings
http.tracker_server_port=80

#use "#include" directive to include HTTP other settiongs
##include http.conf
```
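Besides `fdfs_monitor`, an end-to-end check is to upload a file and download it again. The bash sketch below uses the `fdfs_upload_file` and `fdfs_download_file` tools installed alongside the daemons in /usr/bin. `fdfs_upload_file` prints the new file ID on success. The wrapper function `fdfs_roundtrip` is hypothetical, and it needs the client's base_path directory to exist.

```shell
# fdfs_roundtrip CLIENT_CONF LOCAL_FILE
# Uploads LOCAL_FILE, downloads it again by file ID and compares the bytes.
fdfs_roundtrip() {
  local conf=$1 src=$2 file_id dst
  file_id=$(fdfs_upload_file "$conf" "$src") || return 1
  echo "uploaded as: ${file_id}"
  dst=$(mktemp) || return 1
  if ! fdfs_download_file "$conf" "$file_id" "$dst"; then
    rm -f "$dst"
    return 1
  fi
  if cmp -s "$src" "$dst"; then
    echo "round trip OK"
    rm -f "$dst"
  else
    echo "downloaded content differs from ${src}" >&2
    rm -f "$dst"
    return 1
  fi
}
```

For example, `fdfs_roundtrip /etc/fdfs/client.conf /etc/hosts` uploads /etc/hosts and verifies the copy stored in the cluster.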

Create the directory that base_path in client.conf points to:

```
[root@storage01 fdfs]# useradd guilin
[root@storage01 fdfs]# mkdir /home/guilin/fastdfs
```

Check the cluster status:

[root@storage01 fdfs]# fdfs_monitor client.conf [2018-09-18 01:59:46] DEBUG - base_path=/home/guilin/fastdfs, connect_timeout=30, network_timeout=60, tracker_server_count=2, anti_steal_token=0, anti_steal_secret_key length=0, use_connection_pool=0, g_connection_pool_max_idle_time=3600s, use_storage_id=0, storage server id count: 0 server_count=2, server_index=1 tracker server is 10.0.0.182:22122 group count: 2 Group 1: group name = dev disk total space = 51175 MB disk free space = 49054 MB trunk free space = 0 MB storage server count = 3 active server count = 1 storage server port = 23000 storage HTTP port = 8888 store path count = 1 subdir count per path = 256 current write server index = 0 current trunk file id = 0 Storage 1: id = 172.16.1.190 ip_addr = 172.16.1.190 (storage01) ACTIVE http domain = version = 5.08 join time = 2018-09-18 01:46:01 up time = 2018-09-18 01:46:01 total storage = 51175 MB free storage = 49054 MB upload priority = 10 store_path_count = 1 subdir_count_per_path = 256 storage_port = 23000 storage_http_port = 8888 current_write_path = 0 source storage id = if_trunk_server = 0 connection.alloc_count = 256 connection.current_count = 0 connection.max_count = 2 total_upload_count = 0 success_upload_count = 0 total_append_count = 0 success_append_count = 0 total_modify_count = 0 success_modify_count = 0 total_truncate_count = 0 success_truncate_count = 0 total_set_meta_count = 0 success_set_meta_count = 0 total_delete_count = 0 success_delete_count = 0 total_download_count = 0 success_download_count = 0 total_get_meta_count = 0 success_get_meta_count = 0 total_create_link_count = 0 success_create_link_count = 0 total_delete_link_count = 0 success_delete_link_count = 0 total_upload_bytes = 0 success_upload_bytes = 0 total_append_bytes = 0 success_append_bytes = 0 total_modify_bytes = 0 success_modify_bytes = 0 stotal_download_bytes = 0 success_download_bytes = 0 total_sync_in_bytes = 0 success_sync_in_bytes = 0 total_sync_out_bytes = 0 
success_sync_out_bytes = 0 total_file_open_count = 0 success_file_open_count = 0 total_file_read_count = 0 success_file_read_count = 0 total_file_write_count = 0 success_file_write_count = 0 last_heart_beat_time = 2018-09-18 01:59:19 last_source_update = 1970-01-01 08:00:00 last_sync_update = 1970-01-01 08:00:00 last_synced_timestamp = 1970-01-01 08:00:00 Storage 2: id = 172.16.1.191 ip_addr = 172.16.1.191 WAIT_SYNC http domain = version = 5.08 join time = 2018-09-18 01:46:01 up time = 2018-09-18 01:46:01 total storage = 51175 MB free storage = 49058 MB upload priority = 10 store_path_count = 1 subdir_count_per_path = 256 storage_port = 23000 storage_http_port = 8888 current_write_path = 0 source storage id = 172.16.1.190 if_trunk_server = 0 connection.alloc_count = 256 connection.current_count = 0 connection.max_count = 2 total_upload_count = 0 success_upload_count = 0 total_append_count = 0 success_append_count = 0 total_modify_count = 0 success_modify_count = 0 total_truncate_count = 0 success_truncate_count = 0 total_set_meta_count = 0 success_set_meta_count = 0 total_delete_count = 0 success_delete_count = 0 total_download_count = 0 success_download_count = 0 total_get_meta_count = 0 success_get_meta_count = 0 total_create_link_count = 0 success_create_link_count = 0 total_delete_link_count = 0 success_delete_link_count = 0 total_upload_bytes = 0 success_upload_bytes = 0 total_append_bytes = 0 success_append_bytes = 0 total_modify_bytes = 0 success_modify_bytes = 0 stotal_download_bytes = 0 success_download_bytes = 0 total_sync_in_bytes = 0 success_sync_in_bytes = 0 total_sync_out_bytes = 0 success_sync_out_bytes = 0 total_file_open_count = 0 success_file_open_count = 0 total_file_read_count = 0 success_file_read_count = 0 total_file_write_count = 0 success_file_write_count = 0 last_heart_beat_time = 2018-09-18 01:59:33 last_source_update = 1970-01-01 08:00:00 last_sync_update = 1970-01-01 08:00:00 last_synced_timestamp = 1970-01-01 08:00:00 Storage 3: id = 
172.16.1.192
```
        ip_addr = 172.16.1.192  WAIT_SYNC
        http domain =
        version = 5.08
        join time = 2018-09-18 01:46:01
        up time = 2018-09-18 01:46:01
        total storage = 51175 MB
        free storage = 49057 MB
        upload priority = 10
        store_path_count = 1
        subdir_count_per_path = 256
        storage_port = 23000
        storage_http_port = 8888
        current_write_path = 0
        source storage id = 172.16.1.190
        if_trunk_server = 0
        connection.alloc_count = 256
        connection.current_count = 0
        connection.max_count = 2
        total_upload_count = 0
        success_upload_count = 0
        total_append_count = 0
        success_append_count = 0
        total_modify_count = 0
        success_modify_count = 0
        total_truncate_count = 0
        success_truncate_count = 0
        total_set_meta_count = 0
        success_set_meta_count = 0
        total_delete_count = 0
        success_delete_count = 0
        total_download_count = 0
        success_download_count = 0
        total_get_meta_count = 0
        success_get_meta_count = 0
        total_create_link_count = 0
        success_create_link_count = 0
        total_delete_link_count = 0
        success_delete_link_count = 0
        total_upload_bytes = 0
        success_upload_bytes = 0
        total_append_bytes = 0
        success_append_bytes = 0
        total_modify_bytes = 0
        success_modify_bytes = 0
        stotal_download_bytes = 0
        success_download_bytes = 0
        total_sync_in_bytes = 0
        success_sync_in_bytes = 0
        total_sync_out_bytes = 0
        success_sync_out_bytes = 0
        total_file_open_count = 0
        success_file_open_count = 0
        total_file_read_count = 0
        success_file_read_count = 0
        total_file_write_count = 0
        success_file_write_count = 0
        last_heart_beat_time = 2018-09-18 01:59:32
        last_source_update = 1970-01-01 08:00:00
        last_sync_update = 1970-01-01 08:00:00
        last_synced_timestamp = 1970-01-01 08:00:00

Group 2:
group name = ops
disk total space = 51175 MB
disk free space = 49054 MB
trunk free space = 0 MB
storage server count = 3
active server count = 1
storage server port = 23001
storage HTTP port = 8888
store path count = 1
subdir count per path = 256
current write server index = 0
current trunk file id = 0

    Storage 1:
        id = 10.0.0.190
        ip_addr = 10.0.0.190 (storage01)  ACTIVE
        http domain =
        version = 5.08
        join time = 2018-09-18 01:46:11
        up time = 2018-09-18 01:46:11
        total storage = 51175 MB
        free storage = 49054 MB
        upload priority = 10
        store_path_count = 1
        subdir_count_per_path = 256
        storage_port = 23001
        storage_http_port = 8888
        current_write_path = 0
        source storage id =
        if_trunk_server = 0
        connection.alloc_count = 256
        connection.current_count = 0
        connection.max_count = 2
        total_upload_count = 0
        success_upload_count = 0
        total_append_count = 0
        success_append_count = 0
        total_modify_count = 0
        success_modify_count = 0
        total_truncate_count = 0
        success_truncate_count = 0
        total_set_meta_count = 0
        success_set_meta_count = 0
        total_delete_count = 0
        success_delete_count = 0
        total_download_count = 0
        success_download_count = 0
        total_get_meta_count = 0
        success_get_meta_count = 0
        total_create_link_count = 0
        success_create_link_count = 0
        total_delete_link_count = 0
        success_delete_link_count = 0
        total_upload_bytes = 0
        success_upload_bytes = 0
        total_append_bytes = 0
        success_append_bytes = 0
        total_modify_bytes = 0
        success_modify_bytes = 0
        stotal_download_bytes = 0
        success_download_bytes = 0
        total_sync_in_bytes = 0
        success_sync_in_bytes = 0
        total_sync_out_bytes = 0
        success_sync_out_bytes = 0
        total_file_open_count = 0
        success_file_open_count = 0
        total_file_read_count = 0
        success_file_read_count = 0
        total_file_write_count = 0
        success_file_write_count = 0
        last_heart_beat_time = 2018-09-18 01:59:27
        last_source_update = 1970-01-01 08:00:00
        last_sync_update = 1970-01-01 08:00:00
        last_synced_timestamp = 1970-01-01 08:00:00

    Storage 2:
        id = 10.0.0.191
        ip_addr = 10.0.0.191  WAIT_SYNC
        http domain =
        version = 5.08
        join time = 2018-09-18 01:46:11
        up time = 2018-09-18 01:46:11
        total storage = 51175 MB
        free storage = 49058 MB
        upload priority = 10
        store_path_count = 1
        subdir_count_per_path = 256
        storage_port = 23001
        storage_http_port = 8888
        current_write_path = 0
        source storage id = 10.0.0.190
        if_trunk_server = 0
        connection.alloc_count = 256
        connection.current_count = 0
        connection.max_count = 2
        total_upload_count = 0
        success_upload_count = 0
        total_append_count = 0
        success_append_count = 0
        total_modify_count = 0
        success_modify_count = 0
        total_truncate_count = 0
        success_truncate_count = 0
        total_set_meta_count = 0
        success_set_meta_count = 0
        total_delete_count = 0
        success_delete_count = 0
        total_download_count = 0
        success_download_count = 0
        total_get_meta_count = 0
        success_get_meta_count = 0
        total_create_link_count = 0
        success_create_link_count = 0
        total_delete_link_count = 0
        success_delete_link_count = 0
        total_upload_bytes = 0
        success_upload_bytes = 0
        total_append_bytes = 0
        success_append_bytes = 0
        total_modify_bytes = 0
        success_modify_bytes = 0
        stotal_download_bytes = 0
        success_download_bytes = 0
        total_sync_in_bytes = 0
        success_sync_in_bytes = 0
        total_sync_out_bytes = 0
        success_sync_out_bytes = 0
        total_file_open_count = 0
        success_file_open_count = 0
        total_file_read_count = 0
        success_file_read_count = 0
        total_file_write_count = 0
        success_file_write_count = 0
        last_heart_beat_time = 2018-09-18 01:59:34
        last_source_update = 1970-01-01 08:00:00
        last_sync_update = 1970-01-01 08:00:00
        last_synced_timestamp = 1970-01-01 08:00:00

    Storage 3:
        id = 10.0.0.192
        ip_addr = 10.0.0.192  WAIT_SYNC
        http domain =
        version = 5.08
        join time = 2018-09-18 01:46:11
        up time = 2018-09-18 01:46:11
        total storage = 51175 MB
        free storage = 49057 MB
        upload priority = 10
        store_path_count = 1
        subdir_count_per_path = 256
        storage_port = 23001
        storage_http_port = 8888
        current_write_path = 0
        source storage id = 10.0.0.190
        if_trunk_server = 0
        connection.alloc_count = 256
        connection.current_count = 0
        connection.max_count = 2
        total_upload_count = 0
        success_upload_count = 0
        total_append_count = 0
        success_append_count = 0
        total_modify_count = 0
        success_modify_count = 0
        total_truncate_count = 0
        success_truncate_count = 0
        total_set_meta_count = 0
        success_set_meta_count = 0
        total_delete_count = 0
        success_delete_count = 0
        total_download_count = 0
        success_download_count = 0
        total_get_meta_count = 0
        success_get_meta_count = 0
        total_create_link_count = 0
        success_create_link_count = 0
        total_delete_link_count = 0
        success_delete_link_count = 0
        total_upload_bytes = 0
        success_upload_bytes = 0
        total_append_bytes = 0
        success_append_bytes = 0
        total_modify_bytes = 0
        success_modify_bytes = 0
        stotal_download_bytes = 0
        success_download_bytes = 0
        total_sync_in_bytes = 0
        success_sync_in_bytes = 0
        total_sync_out_bytes = 0
        success_sync_out_bytes = 0
        total_file_open_count = 0
        success_file_open_count = 0
        total_file_read_count = 0
        success_file_read_count = 0
        total_file_write_count = 0
        success_file_write_count = 0
        last_heart_beat_time = 2018-09-18 01:59:35
        last_source_update = 1970-01-01 08:00:00
        last_sync_update = 1970-01-01 08:00:00
        last_synced_timestamp = 1970-01-01 08:00:00
```

Monitoring

Items to monitor (in a healthy group the two counts are equal):

```
storage server count = 3
active server count = 3
```

Status of each node:

```
Storage 1:
    id = 10.0.0.190
    ip_addr = 10.0.0.190  ACTIVE
```
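The rule above can be checked automatically. It has two parts: the server count must equal the active count, and every node must be ACTIVE. The sketch below is a minimal version. It assumes fdfs_monitor prints one field per line in the "group name = <name>", "storage server count = <n>", "active server count = <n>" and "ip_addr = <ip> ... <STATE>" forms seen in this article. The helper names are hypothetical.

```python
import re
import subprocess

# Line formats as they appear in the fdfs_monitor output shown in this article.
GROUP_RE = re.compile(r"^\s*group name = (\S+)")
TOTAL_RE = re.compile(r"^\s*storage server count = (\d+)")
ACTIVE_RE = re.compile(r"^\s*active server count = (\d+)")
NODE_RE = re.compile(
    r"^\s*ip_addr = (\S+).*?\b(INIT|WAIT_SYNC|SYNCING|DELETED|OFFLINE|ONLINE|ACTIVE)\s*$"
)


def parse_monitor(text):
    """Per group: server count, active count, and (ip, state) of every non-ACTIVE node."""
    groups = {}
    current = None
    for line in text.splitlines():
        if m := GROUP_RE.match(line):
            current = groups.setdefault(m.group(1), {"total": 0, "active": 0, "not_active": []})
        elif current is None:
            continue  # skip the DEBUG / tracker header printed before the first group
        elif m := TOTAL_RE.match(line):
            current["total"] = int(m.group(1))
        elif m := ACTIVE_RE.match(line):
            current["active"] = int(m.group(1))
        elif (m := NODE_RE.match(line)) and m.group(2) != "ACTIVE":
            current["not_active"].append((m.group(1), m.group(2)))
    return groups


def unhealthy_groups(groups):
    """Groups whose active count differs from the server count, or that have non-ACTIVE nodes."""
    return [name for name, g in groups.items() if g["active"] != g["total"] or g["not_active"]]


def check_cluster(conf="/etc/fdfs/client.conf"):
    """Run fdfs_monitor the same way as in this article and return the unhealthy groups."""
    result = subprocess.run(["fdfs_monitor", conf], capture_output=True, text=True, check=True)
    return unhealthy_groups(parse_monitor(result.stdout))
```

A cron job or monitoring agent could, for example, raise an alert whenever check_cluster() returns a non-empty list.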

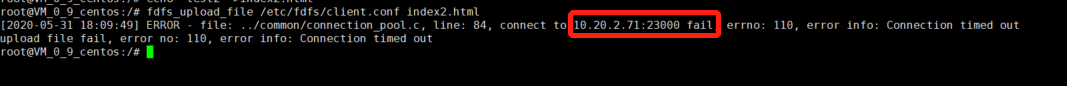

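Problem

A storage server is usually in one of the following seven states (FDFS_STORAGE_STATUS_*):

- INIT: initializing; no source server for synchronizing the existing data has been assigned yet
- WAIT_SYNC: waiting to sync; a source server for synchronizing the existing data has been assigned
- SYNCING: synchronizing
- DELETED: deleted; the server has been removed from the group
- OFFLINE: offline
- ONLINE: online, but not yet able to serve requests
- ACTIVE: online and serving requests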
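Scripts that watch fdfs_monitor can turn the list above into a small lookup table. This is a sketch only, and StorageStatus, TRANSITIONAL and triage are hypothetical names. It treats INIT, WAIT_SYNC, SYNCING and ONLINE as the normal path of a newly joined node toward ACTIVE. That is an assumption drawn from the descriptions above.

```python
from enum import Enum


class StorageStatus(Enum):
    """The seven storage server states listed above, with their meaning."""
    INIT = "initializing, no source server assigned yet"
    WAIT_SYNC = "source server assigned, waiting to sync existing data"
    SYNCING = "syncing existing data"
    DELETED = "removed from the group"
    OFFLINE = "offline"
    ONLINE = "online, not yet serving"
    ACTIVE = "online and serving"


# Assumption: states a newly added node passes through on its way to ACTIVE.
TRANSITIONAL = {StorageStatus.INIT, StorageStatus.WAIT_SYNC,
                StorageStatus.SYNCING, StorageStatus.ONLINE}


def triage(state_name):
    """Map a state name printed by fdfs_monitor to a rough action: ok / wait / investigate."""
    state = StorageStatus[state_name]
    if state is StorageStatus.ACTIVE:
        return "ok"
    if state in TRANSITIONAL:
        return "wait"         # expected to clear once the initial sync completes
    return "investigate"      # OFFLINE or DELETED: check the storage process, network, config
```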

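The healthy state is ACTIVE. In the output above, some servers (for example 10.0.0.191 and 10.0.0.192) were still in WAIT_SYNC. This means they had not yet synchronized the existing data from their source server. fdfs_monitor only reports the state and does not change it. Running it again later:

```
fdfs_monitor /etc/fdfs/client.conf
```

showed that the status had returned to normal.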

Testing the file system

Uploading a file

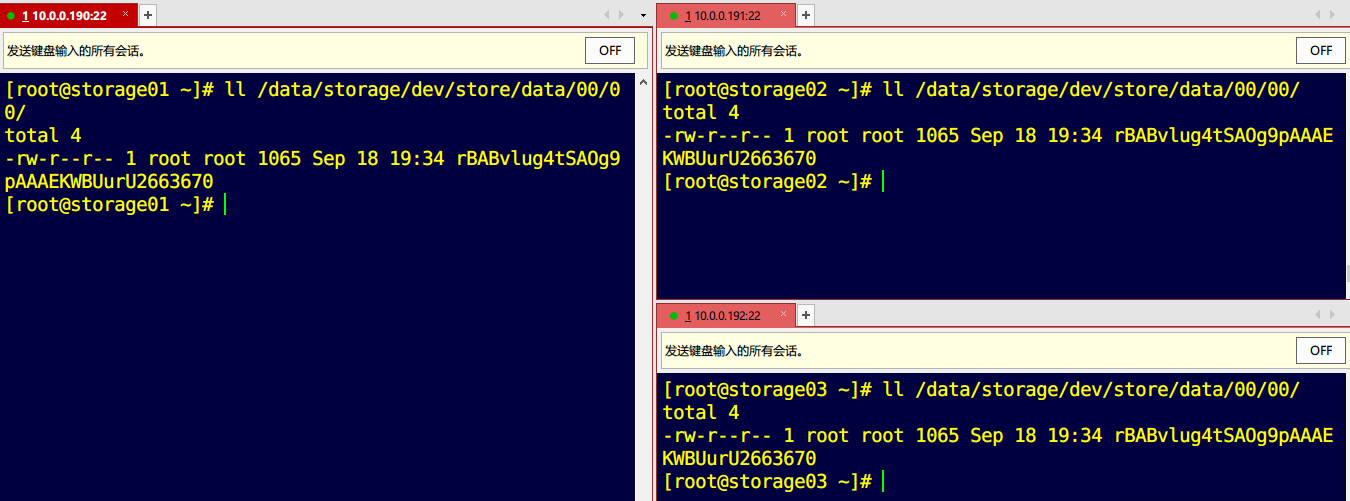

```
[root@storage01 ~]# fdfs_upload_file /etc/fdfs/client.conf /etc/passwd
dev/M00/00/00/rBABvlug4tSAOg9pAAAEKWBUurU2663670
```

After the upload, the command returns the file ID dev/M00/00/00/rBABvlug4tSAOg9pAAAEKWBUurU2663670.

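In the returned path, dev is the group name and M00 is a virtual disk. M00 maps to the group's first store path (store_path0), and the next two levels (00/00) are subdirectories under that path's data directory. Physical devices can be mounted under the dev and ops directories, for example /dev/sdb on dev and /dev/sdc on ops.

```
/data/storage/dev/store/data/00/00/  ==  dev/M00/00/00/
```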
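The mapping above can be written as a small function. It is useful when you need to locate a file on disk from its file ID. This is a minimal sketch. STORE_PATHS is a hypothetical table filled in with the store_path0 values this article uses for dev and ops. Reading the two digits after "M" as a hexadecimal store-path index is an assumption; for M00 it makes no difference.

```python
from pathlib import PurePosixPath

# Hypothetical table: group name -> store paths (index 0 is M00, index 1 is M01, ...).
STORE_PATHS = {
    "dev": ["/data/storage/dev/store"],
    "ops": ["/data/storage/ops/store"],
}


def local_path(file_id, store_paths=STORE_PATHS):
    """Translate a FastDFS file ID such as dev/M00/00/00/<name> into its path on a storage node."""
    group, disk, sub1, sub2, name = file_id.split("/")
    if len(disk) != 3 or not disk.startswith("M"):
        raise ValueError(f"unexpected store path token: {disk!r}")
    index = int(disk[1:], 16)  # assumption: two hex digits give the store path index
    return PurePosixPath(store_paths[group][index], "data", sub1, sub2, name)
```

For the upload above, local_path("dev/M00/00/00/rBABvlug4tSAOg9pAAAEKWBUurU2663670") points to the same file shown by the ll listing below.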

```
[root@storage01 ~]# ll /data/storage/dev/store/data/00/00
total 4
-rw-r--r-- 1 root root 1065 Sep 18 19:34 rBABvlug4tSAOg9pAAAEKWBUurU2663670
```

As the figure shows, the file we just uploaded is stored on all of the storage nodes. Its name changes on upload, but its content does not.

We can download the file and check it:

```
[root@storage01 ~]# fdfs_download_file /etc/fdfs/client.conf dev/M00/00/00/rBABvlug4tSAOg9pAAAEKWBUurU2663670
[root@storage01 ~]# ls
anaconda-ks.cfg  rBABvlug4tSAOg9pAAAEKWBUurU2663670
```

```
[root@storage01 ~]# cat rBABvlug4tSAOg9pAAAEKWBUurU2663670
root:x:0:0:root:/root:/bin/bash
bin:x:1:1:bin:/bin:/sbin/nologin
daemon:x:2:2:daemon:/sbin:/sbin/nologin
adm:x:3:4:adm:/var/adm:/sbin/nologin
lp:x:4:7:lp:/var/spool/lpd:/sbin/nologin
sync:x:5:0:sync:/sbin:/bin/sync
shutdown:x:6:0:shutdown:/sbin:/sbin/shutdown
halt:x:7:0:halt:/sbin:/sbin/halt
mail:x:8:12:mail:/var/spool/mail:/sbin/nologin
operator:x:11:0:operator:/root:/sbin/nologin
games:x:12:100:games:/usr/games:/sbin/nologin
ftp:x:14:50:FTP User:/var/ftp:/sbin/nologin
nobody:x:99:99:Nobody:/:/sbin/nologin
systemd-network:x:192:192:systemd Network Management:/:/sbin/nologin
dbus:x:81:81:System message bus:/:/sbin/nologin
polkitd:x:999:998:User for polkitd:/:/sbin/nologin
tss:x:59:59:Account used by the trousers package to sandbox the tcsd daemon:/dev/null:/sbin/nologin
abrt:x:173:173::/etc/abrt:/sbin/nologin
sshd:x:74:74:Privilege-separated SSH:/var/empty/sshd:/sbin/nologin
postfix:x:89:89::/var/spool/postfix:/sbin/nologin
chrony:x:998:996::/var/lib/chrony:/sbin/nologin
ntp:x:38:38::/etc/ntp:/sbin/nologin
guilin:x:1000:1000::/home/guilin:/bin/bash
```

```
[root@storage01 ~]# md5sum /etc/passwd
d547c843a254e3006930956843470814  /etc/passwd
[root@storage01 ~]# md5sum rBABvlug4tSAOg9pAAAEKWBUurU2663670
d547c843a254e3006930956843470814  rBABvlug4tSAOg9pAAAEKWBUurU2663670
```

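The downloaded content matches the /etc/passwd we uploaded, and so does the MD5. Together with the directory listings on the nodes, this shows that each file is stored on every node of its group.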
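The same round-trip check can be scripted. This is a sketch with hypothetical helper names. md5sum hashes a file in chunks, so large images or videos are never loaded into memory all at once. verify_upload downloads a file ID with fdfs_download_file into a temporary directory and compares the two digests. It relies on the behavior shown above: the downloaded file is saved in the current directory under its FastDFS file name.

```python
import hashlib
import subprocess
import tempfile
from pathlib import Path


def md5sum(path, chunk_size=1 << 20):
    """Hex MD5 of a file, read in chunks (same digest md5sum prints)."""
    digest = hashlib.md5()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_upload(local_file, file_id, conf="/etc/fdfs/client.conf"):
    """Download file_id into a scratch directory and compare its MD5 with local_file."""
    with tempfile.TemporaryDirectory() as tmp:
        # Same invocation as above; the file lands in the working directory.
        subprocess.run(["fdfs_download_file", conf, file_id], cwd=tmp, check=True)
        downloaded = Path(tmp) / file_id.rsplit("/", 1)[-1]
        return md5sum(local_file) == md5sum(downloaded)
```

For example, verify_upload("/etc/passwd", "dev/M00/00/00/rBABvlug4tSAOg9pAAAEKWBUurU2663670") repeats the manual check above.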

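We can also choose the group at upload time. A storage node may host several groups, each listening on its own port. To upload to a particular group, append the IP and port of any storage node in that group to the command: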

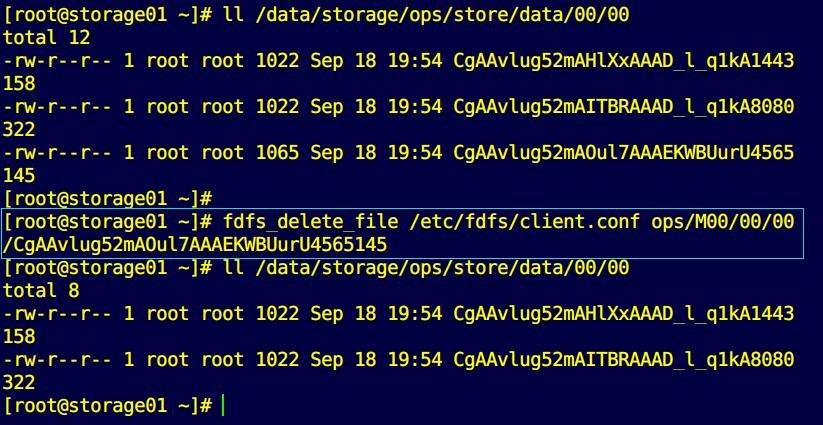

```
[root@storage01 ~]# fdfs_upload_file /etc/fdfs/client.conf /etc/passwd 172.16.1.190:23001
ops/M00/00/00/CgAAvlug52mAOul7AAAEKWBUurU4565145
[root@storage01 ~]# ll /data/storage/ops/store/data/00/00
total 12
-rw-r--r-- 1 root root 1022 Sep 18 19:54 CgAAvlug52mAHlXxAAAD_l_q1kA1443158
-rw-r--r-- 1 root root 1022 Sep 18 19:54 CgAAvlug52mAITBRAAAD_l_q1kA8080322
-rw-r--r-- 1 root root 1065 Sep 18 19:54 CgAAvlug52mAOul7AAAEKWBUurU4565145
```
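When uploads are scripted, it helps to keep the group-to-port mapping in one place. This is a minimal sketch. GROUP_TARGETS, upload_cmd and upload are hypothetical. The ports are the ones this article assigns (dev on 23000, ops on 23001), and 172.16.1.190 is storage01. Taking the file ID from the last line of stdout is also an assumption.

```python
import subprocess
from typing import List, Optional

CLIENT_CONF = "/etc/fdfs/client.conf"
# Hypothetical map for this article's layout: each storage node serves
# dev on port 23000 and ops on port 23001.
GROUP_TARGETS = {"dev": "172.16.1.190:23000", "ops": "172.16.1.190:23001"}


def upload_cmd(local_file: str, group: Optional[str] = None,
               conf: str = CLIENT_CONF) -> List[str]:
    """Build the fdfs_upload_file argv; with a group, append that group's storage ip:port."""
    cmd = ["fdfs_upload_file", conf, local_file]
    if group is not None:
        cmd.append(GROUP_TARGETS[group])
    return cmd


def upload(local_file: str, group: Optional[str] = None) -> str:
    """Run the upload and return the file ID (assumed to be the last line of stdout)."""
    result = subprocess.run(upload_cmd(local_file, group),
                            capture_output=True, text=True, check=True)
    return result.stdout.strip().splitlines()[-1]
```

Without a group, the tracker chooses the group itself, as in the first upload above.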

Deleting a file

```
[root@storage01 ~]# fdfs_delete_file /etc/fdfs/client.conf ops/M00/00/00/CgAAvlug52mAOul7AAAEKWBUurU4565145
[root@storage01 ~]# ll /data/storage/ops/store/data/00/00
total 8
-rw-r--r-- 1 root root 1022 Sep 18 19:54 CgAAvlug52mAHlXxAAAD_l_q1kA1443158
-rw-r--r-- 1 root root 1022 Sep 18 19:54 CgAAvlug52mAITBRAAAD_l_q1kA8080322
```

The copies of the file on all nodes are deleted.

Appending to a file

Preparation

```
[root@storage01 ~]# echo 1001 >guilin01.txt
[root@storage01 ~]# echo 2002 >guilin02.txt
[root@storage01 ~]# ls
guilin01.txt  guilin02.txt
```

Upload the first file as an appender file:

```
[root@storage01 ~]# fdfs_upload_appender /etc/fdfs/client.conf guilin01.txt
dev/M00/00/00/rBABv1ug7feEW8dOAAAAAIWphcQ520.txt
[root@storage01 ~]# fdfs_download_file /etc/fdfs/client.conf dev/M00/00/00/rBABv1ug7feEW8dOAAAAAIWphcQ520.txt
[root@storage01 ~]# ls
guilin01.txt  guilin02.txt  rBABv1ug7feEW8dOAAAAAIWphcQ520.txt
[root@storage01 ~]# cat rBABv1ug7feEW8dOAAAAAIWphcQ520.txt
1001
```

The file was uploaded successfully.

Now append the contents of the second file to the file we just uploaded:

```
[root@storage01 ~]# fdfs_append_file /etc/fdfs/client.conf dev/M00/00/00/rBABv1ug7feEW8dOAAAAAIWphcQ520.txt guilin02.txt
```

Download it again to check. First delete the copy downloaded earlier:

```
[root@storage01 ~]# rm rBABv1ug7feEW8dOAAAAAIWphcQ520.txt
[root@storage01 ~]# ls
guilin01.txt  guilin02.txt
[root@storage01 ~]# fdfs_download_file /etc/fdfs/client.conf dev/M00/00/00/rBABv1ug7feEW8dOAAAAAIWphcQ520.txt
[root@storage01 ~]# ls
guilin01.txt  guilin02.txt  rBABv1ug7feEW8dOAAAAAIWphcQ520.txt
[root@storage01 ~]# cat rBABv1ug7feEW8dOAAAAAIWphcQ520.txt
1001
2002
```

The downloaded file now contains both lines, so the append succeeded.

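Viewing file attributes (I suspect this step is wrong; pointers from anyone more experienced are welcome)

```
[root@storage01 ~]# fdfs_monitor /etc/fdfs/client.conf dev/M00/00/00/rBABv1ug7feEW8dOAAAAAIWphcQ520.txt
[2018-09-18 20:34:39] DEBUG - base_path=/home/guilin/fastdfs, connect_timeout=30, network_timeout=60, tracker_server_count=2, anti_steal_token=0, anti_steal_secret_key length=0, use_connection_pool=0, g_connection_pool_max_idle_time=3600s, use_storage_id=0, storage server id count: 0

server_count=2, server_index=0

tracker server is 172.16.1.181:22122

Invalid command dev/M00/00/00/rBABv1ug7feEW8dOAAAAAIWphcQ520.txt

Usage: fdfs_monitor <config_file> [-h <tracker_server>] [list|delete|set_trunk_server <group_name> [storage_id]]
```

The usage line explains the error. fdfs_monitor only accepts the list, delete and set_trunk_server subcommands, so it cannot report on an individual file. The FastDFS client tools also include fdfs_file_info. It takes the same config file plus a file ID and prints the file's source IP, size, creation time and CRC32.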
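Much of this metadata also appears to be packed into the generated file name itself. The sketch below decodes it. The decoding rests on my reading of FastDFS's naming scheme, not on anything stated in this article. The first 27 characters of the name are taken to be URL-safe base64 ('-' and '_') of a 20-byte big-endian header. The header holds the source storage IP (4 bytes), the creation timestamp (4), the file size (8) and a CRC32 (4). For ordinary files, only the low 32 bits of the size field are taken to hold the real size. The assumption agrees with the listings in this article. The first upload decodes to 172.16.1.190, 1065 bytes, created at 2018-09-18 11:34:44 UTC, which is 19:34 in the UTC+8 ll listing. The ops upload decodes to 10.0.0.190 with the same size and CRC. decode_file_id is a hypothetical helper. It ignores the characters after the first 27 and does not handle appender files.

```python
import base64
import ipaddress
import struct
from datetime import datetime, timezone


def decode_file_id(file_id):
    """Decode the header assumed to be base64-encoded in a FastDFS file name."""
    name = file_id.rsplit("/", 1)[-1]
    # Assumption: 27 URL-safe base64 chars encode 20 bytes; one '=' restores the padding.
    raw = base64.urlsafe_b64decode(name[:27] + "=")
    ip_bytes, created, size_field, crc32 = struct.unpack(">4sIQI", raw)
    return {
        "source_ip": str(ipaddress.IPv4Address(ip_bytes)),
        "create_timestamp": created,
        "create_time_utc": datetime.fromtimestamp(created, tz=timezone.utc).isoformat(),
        # Assumption: high bits carry flags/random data; low 32 bits are the size.
        "file_size": size_field & 0xFFFFFFFF,
        "crc32": f"{crc32:08x}",
    }
```

For example, decode_file_id("dev/M00/00/00/rBABvlug4tSAOg9pAAAEKWBUurU2663670") yields source_ip 172.16.1.190 and file_size 1065, matching the ll output earlier.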

Configuring the image (video) server

Installing the web build dependencies

```
yum install -y pcre pcre-devel openssl openssl-devel gcc gcc-c++ autoconf automake make
```

Installing Nginx (compiled with fastdfs-nginx-module)

```
useradd www -M -s /sbin/nologin
tar zxf nginx-1.10.3.tar.gz
cd nginx-1.10.3
./configure --prefix=/data/webserver/nginx-1.10.3 \
    --user=www --group=www \
    --with-http_ssl_module --with-http_stub_status_module \
    --add-module=/data/tools/fastdfs-nginx-module/src/
make
make install
```

Copying the module and HTTP configuration files into the FastDFS configuration directory (/etc/fdfs)

```
[root@storage01 nginx-1.13.7]# cd /data/tools/fastdfs-nginx-module/src/
[root@storage01 src]# ls
common.c  common.h  config  mod_fastdfs.conf  ngx_http_fastdfs_module.c
[root@storage01 src]# cp mod_fastdfs.conf /etc/fdfs/
[root@storage01 src]# cd /data/tools/fastdfs-5.08/conf/
[root@storage01 conf]# cp anti-steal.jpg http.conf mime.types /etc/fdfs/
```

Editing the module configuration file (/etc/fdfs/mod_fastdfs.conf)

```
[root@storage01 fdfs]# cat mod_fastdfs.conf
# connect timeout in seconds
# default value is 30s
connect_timeout=2

# network recv and send timeout in seconds
# default value is 30s
network_timeout=30

# the base path to store log files
base_path=/tmp

# if load FastDFS parameters from tracker server
# since V1.12
# default value is false
load_fdfs_parameters_from_tracker=true

# storage sync file max delay seconds
# same as tracker.conf
# valid only when load_fdfs_parameters_from_tracker is false
# since V1.12
# default value is 86400 seconds (one day)
storage_sync_file_max_delay = 86400

# if use storage ID instead of IP address
# same as tracker.conf
# valid only when load_fdfs_parameters_from_tracker is false
# default value is false
# since V1.13
use_storage_id = false

# specify storage ids filename, can use relative or absolute path
# same as tracker.conf
# valid only when load_fdfs_parameters_from_tracker is false
# since V1.13
storage_ids_filename = storage_ids.conf

# FastDFS tracker_server can occur more than once, and tracker_server format is
#  "host:port", host can be hostname or ip address
# valid only when load_fdfs_parameters_from_tracker is true
tracker_server=172.16.1.180:22122
tracker_server=172.16.1.181:22122

# the port of the local storage server
# the default value is 23000
storage_server_port=23000

# the group name of the local storage server
group_name=group1

# if the url / uri including the group name
# set to false when uri like /M00/00/00/xxx
# set to true when uri like ${group_name}/M00/00/00/xxx, such as group1/M00/xxx
# default value is false
url_have_group_name = true

# path(disk or mount point) count, default value is 1
# must same as storage.conf
store_path_count=1

# store_path#, based 0, if store_path0 not exists, its value is base_path
# the paths must exist
# must same as storage.conf
store_path0=/home/guilin/fastdfs
#store_path1=/home/yuqing/fastdfs1

# standard log level as syslog, case insensitive, value list:
### emerg for emergency
### alert
### crit for critical
### error
### warn for warning
### notice
### info
### debug
log_level=info

# set the log filename, such as /usr/local/apache2/logs/mod_fastdfs.log
# empty for output to stderr (apache and nginx error_log file)
log_filename=

# response mode when the file not exist in the local file system
## proxy: get the content from other storage server, then send to client
## redirect: redirect to the original storage server (HTTP Header is Location)
response_mode=proxy

# the NIC alias prefix, such as eth in Linux, you can see it by ifconfig -a
# multi aliases split by comma. empty value means auto set by OS type
# this parameter used to get all ip address of the local host
# default value is empty
if_alias_prefix=

# use "#include" directive to include HTTP config file
# NOTE: #include is an include directive, do NOT remove the # before include
#include http.conf

# if support flv
# default value is false
# since v1.15
flv_support = true

# flv file extension name
# default value is flv
# since v1.15
flv_extension = flv

# set the group count
# set to non-zero to support multi-group on this storage server
# set to 0 for single group only
# groups settings section as [group1], [group2], ..., [groupN]
# default value is 0
# since v1.14
group_count = 2

# group settings for group #1
# since v1.14
# when support multi-group on this storage server, uncomment following section
[group1]
group_name=dev
storage_server_port=23000
store_path_count=1
store_path0=/data/storage/dev/store

[group2]
group_name=ops
storage_server_port=23001
store_path_count=1
store_path0=/data/storage/ops/store
```
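Several settings here must agree with one another: group_count, the [groupN] sections, and the URL layout nginx will use. A quick consistency check can catch mistakes before nginx is restarted. The sketch below uses Python's configparser with a hypothetical check_mod_fastdfs helper. It checks four things, all of them my own choice rather than an official validator: the number of sections matches group_count, ports are unique, every store_pathN up to store_path_count exists, and url_have_group_name is true (the nginx locations in this article start with the group name).

```python
import configparser


def check_mod_fastdfs(text):
    """Return a list of consistency problems in a multi-group mod_fastdfs.conf."""
    # strict=False tolerates the repeated tracker_server keys; '#' lines are comments,
    # which also covers the "#include http.conf" directive.
    parser = configparser.ConfigParser(strict=False, interpolation=None,
                                       comment_prefixes=("#",))
    # Global keys come before any [groupN] section, so give them a section of their own.
    parser.read_string("[global]\n" + text)

    problems = []
    if not parser.getboolean("global", "url_have_group_name", fallback=False):
        problems.append("url_have_group_name is not true, but the URLs start with the group name")

    expected = parser.getint("global", "group_count", fallback=0)
    groups = [s for s in parser.sections() if s.startswith("group")]
    if len(groups) != expected:
        problems.append(f"group_count={expected} but {len(groups)} [groupN] sections")

    seen_ports = {}
    for section in groups:
        name = parser.get(section, "group_name", fallback=section)
        port = parser.get(section, "storage_server_port", fallback=None)
        if port in seen_ports:
            problems.append(f"{name} and {seen_ports[port]} share storage_server_port {port}")
        seen_ports[port] = name
        count = parser.getint(section, "store_path_count", fallback=1)
        for i in range(count):
            if not parser.has_option(section, f"store_path{i}"):
                problems.append(f"{name}: store_path{i} missing")
    return problems
```

Running it on the file contents (for example check_mod_fastdfs(open("/etc/fdfs/mod_fastdfs.conf").read())) should return an empty list for the configuration above.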

Editing the nginx configuration

```
[root@storage01 fdfs]# cd /data/webserver/
[root@storage01 webserver]# ls
nginx-1.10.3
[root@storage01 webserver]# ln -s nginx-1.10.3/ nginx
[root@storage01 webserver]# ll
total 0
lrwxrwxrwx 1 root root 13 Sep 19 00:15 nginx -> nginx-1.10.3/
drwxr-xr-x 6 root root 54 Sep 18 23:34 nginx-1.10.3
```

In the main configuration file (conf/nginx.conf), add include vhosts/fastdfs.conf; inside the http block.

Creating the virtual host configuration file

```
[root@storage01 vhosts]# vim fastdfs.conf
```

```
server {
    listen 80;
    server_name dev.img.linuxbaodian.com;

    location /dev/M00 {
        root /data/storage/dev/store/data;
        ngx_fastdfs_module;
    }
}

server {
    listen 80;
    server_name ops.img.linuxbaodian.com;

    location /ops/M00 {
        root /data/storage/ops/store/data;
        ngx_fastdfs_module;
    }
}
```
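There is one server block per group. Within each block, the server_name, the location prefix and the root can easily drift out of sync with each other and with the URLs used later. The sketch below is a hypothetical generator that derives all three from a single table. The domains and paths are the ones this article uses; the template simply reproduces the block above.

```python
# Hypothetical table: group -> (server_name, store path of that group)
GROUPS = {
    "dev": ("dev.img.linuxbaodian.com", "/data/storage/dev/store"),
    "ops": ("ops.img.linuxbaodian.com", "/data/storage/ops/store"),
}

TEMPLATE = """server {{
    listen 80;
    server_name {domain};

    location /{group}/M00 {{
        root {store}/data;
        ngx_fastdfs_module;
    }}
}}
"""


def render_vhosts(groups=GROUPS):
    """Render one nginx server block per FastDFS group for vhosts/fastdfs.conf."""
    return "\n".join(TEMPLATE.format(group=g, domain=d, store=s)
                     for g, (d, s) in groups.items())
```

The output can be written to vhosts/fastdfs.conf and then checked with sbin/nginx -t as shown below.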

Resolving the domain names (point them at the storage node via DNS or /etc/hosts)

Starting the web service

```
[root@storage01 nginx]# sbin/nginx -t
ngx_http_fastdfs_set pid=8763
ngx_http_fastdfs_set pid=8763
nginx: the configuration file /data/webserver/nginx-1.10.3/conf/nginx.conf syntax is ok
nginx: configuration file /data/webserver/nginx-1.10.3/conf/nginx.conf test is successful
[root@storage01 nginx]# sbin/nginx
ngx_http_fastdfs_set pid=8764
ngx_http_fastdfs_set pid=8764
```

Uploading test images

```
[root@storage01 data]# fdfs_upload_file /etc/fdfs/client.conf 001.png
dev/M00/00/00/rBABwFuhKl-ADCZnAABIISqm48Q954.png
[root@storage01 data]# fdfs_upload_file /etc/fdfs/client.conf 002.png 172.16.1.190:23001
ops/M00/00/00/CgAAvluhKoGAJBAAAAzpdXz2GBk427.png
```

Testing access

```
[root@storage01 data]# ll storage/ops/store/data/00/00/CgAAvluhKoGAJBAAAAzpdXz2GBk427.png
-rw-r--r-- 1 root root 846197 Sep 19 00:40 storage/ops/store/data/00/00/CgAAvluhKoGAJBAAAAzpdXz2GBk427.png
[root@storage01 data]# ll storage/dev/store/data/00/00/rBABwFuhKl-ADCZnAABIISqm48Q954.png
-rw-r--r-- 1 root root 18465 Sep 19 00:39 storage/dev/store/data/00/00/rBABwFuhKl-ADCZnAABIISqm48Q954.png
```

Visit: http://dev.img.linuxbaodian.com/dev/M00/00/00/rBABwFuhKl-ADCZnAABIISqm48Q954.png

Visit: http://ops.img.linuxbaodian.com/ops/M00/00/00/CgAAvluhKoGAJBAAAAzpdXz2GBk427.png
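Because url_have_group_name is true and every file ID starts with its group name, the access URL is just the group's domain followed by the file ID. The sketch below uses hypothetical names (DOMAINS, file_url, fetch_md5). It builds the URL and fetches the file over HTTP, so the served content can be compared with the local original the same way md5sum was used earlier.

```python
import hashlib
import urllib.request

# Hypothetical table: group -> domain configured as server_name for that group.
DOMAINS = {"dev": "dev.img.linuxbaodian.com", "ops": "ops.img.linuxbaodian.com"}


def file_url(file_id, domains=DOMAINS, scheme="http"):
    """Build the public URL of a FastDFS file ID such as dev/M00/00/00/<name>."""
    group = file_id.split("/", 1)[0]
    return f"{scheme}://{domains[group]}/{file_id}"


def fetch_md5(url, timeout=10.0):
    """Download a URL and return the MD5 of the response body."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return hashlib.md5(resp.read()).hexdigest()
```

If fetch_md5(file_url(...)) matches the output of md5sum on the original image, nginx and the FastDFS module are serving the file correctly.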
