全局配置

除了可以通过 annotations 对指定的 Ingress 进行定制之外,我们还可以配置 ingress-nginx 的全局配置,在控制器启动参数中通过标志 --configmap 指定了一个全局的 ConfigMap 对象,我们可以将全局的一些配置直接定义在该对象中即可:

containers:

- args:

- /nginx-ingress-controller

- --configmap=$(POD_NAMESPACE)/ingress-nginx-controller

......比如这里我们用于全局配置的 ConfigMap 名为 ingress-nginx-controller:

kubectl get configmap -n ingress-nginx

NAME DATA AGE

ingress-nginx-controller 1 5d2h比如我们可以添加如下所示的一些常用配置:

kubectl edit configmap ingress-nginx-controller -n ingress-nginx

apiVersion: v1

data:

allow-snippet-annotations: "true"

client-header-buffer-size: 32k # 注意不是下划线

client-max-body-size: 5m

use-gzip: "true"

gzip-level: "7"

large-client-header-buffers: 4 32k

proxy-connect-timeout: 11s

proxy-read-timeout: 12s

keep-alive: "75" # 启用keep-alive,连接复用,提高QPS

keep-alive-requests: "100"

upstream-keepalive-connections: "10000"

upstream-keepalive-requests: "100"

upstream-keepalive-timeout: "60"

disable-ipv6: "true"

disable-ipv6-dns: "true"

max-worker-connections: "65535"

max-worker-open-files: "10240"

kind: ConfigMap

......修改完成后 Nginx 配置会自动重载生效,我们可以查看 nginx.conf 配置文件进行验证:

kubectl exec -it ingress-nginx-controller-gc582 -n ingress-nginx -- cat /etc/nginx/nginx.conf |grep large_client_header_buffers

large_client_header_buffers 4 32k;由于我们这里是 Helm Chart 安装的,为了保证重新部署后配置还在,我们同样需要通过 Values 进行全局配置:

# ci/daemonset-prod.yaml

controller:

config:

allow-snippet-annotations: "true"

client-header-buffer-size: 32k # 注意不是下划线

client-max-body-size: 5m

use-gzip: "true"

gzip-level: "7"

large-client-header-buffers: 4 32k

proxy-connect-timeout: 11s

proxy-read-timeout: 12s

keep-alive: "75" # 启用keep-alive,连接复用,提高QPS

keep-alive-requests: "100"

upstream-keepalive-connections: "10000"

upstream-keepalive-requests: "100"

upstream-keepalive-timeout: "60"

disable-ipv6: "true"

disable-ipv6-dns: "true"

max-worker-connections: "65535"

max-worker-open-files: "10240"

# 其他省略此外往往我们还需要对 ingress-nginx 部署的节点进行性能优化,修改一些内核参数,使得适配 Nginx 的使用场景,一般我们是直接去修改节点上的内核参数,为了能够统一管理,我们可以使用 initContainers 来进行配置:

initContainers:

- command:

- /bin/sh

- -c

- |

mount -o remount rw /proc/sys

sysctl -w net.core.somaxconn=65535 # 具体的配置视具体情况而定

sysctl -w net.ipv4.tcp_tw_reuse=1

sysctl -w net.ipv4.ip_local_port_range="1024 65535"

sysctl -w fs.file-max=1048576

sysctl -w fs.inotify.max_user_instances=16384

sysctl -w fs.inotify.max_user_watches=524288

sysctl -w fs.inotify.max_queued_events=16384

image: busybox

imagePullPolicy: IfNotPresent

name: init-sysctl

securityContext:

capabilities:

add:

- SYS_ADMIN

drop:

- ALL

......由于我们这里使用的是 Helm Chart 安装的 ingress-nginx,同样只需要去配置 Values 值即可,模板中提供了对 initContainers 的支持,配置如下所示:

controller:

# 其他省略,配置 initContainers

extraInitContainers:

- name: init-sysctl

image: busybox

securityContext:

capabilities:

add:

- SYS_ADMIN

drop:

- ALL

command:

- /bin/sh

- -c

- |

mount -o remount rw /proc/sys

sysctl -w net.core.somaxconn=65535 # socket监听的backlog上限

sysctl -w net.ipv4.tcp_tw_reuse=1 # 开启重用,允许将 TIME-WAIT sockets 重新用于新的TCP连接

sysctl -w net.ipv4.ip_local_port_range="1024 65535"

sysctl -w fs.file-max=1048576

sysctl -w fs.inotify.max_user_instances=16384

sysctl -w fs.inotify.max_user_watches=524288

sysctl -w fs.inotify.max_queued_events=16384同样重新部署即可:

helm upgrade --install ingress-nginx . -f ./ci/daemonset-prod.yaml --namespace ingress-nginx部署完成后通过 initContainers 就可以修改节点内核参数了,生产环境建议对节点内核参数进行相应的优化。

竹影清风阁

竹影清风阁

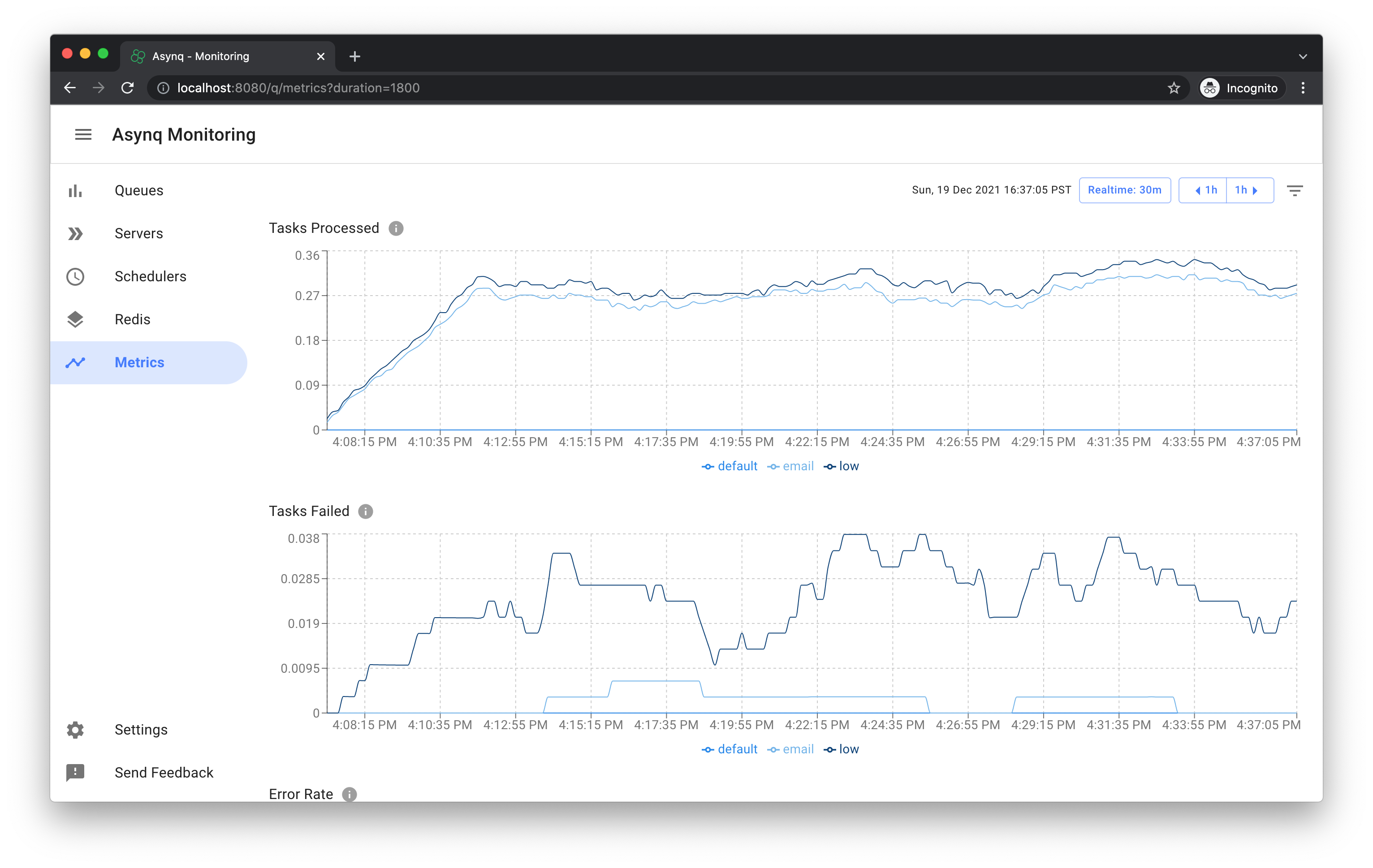

Asynq任务框架

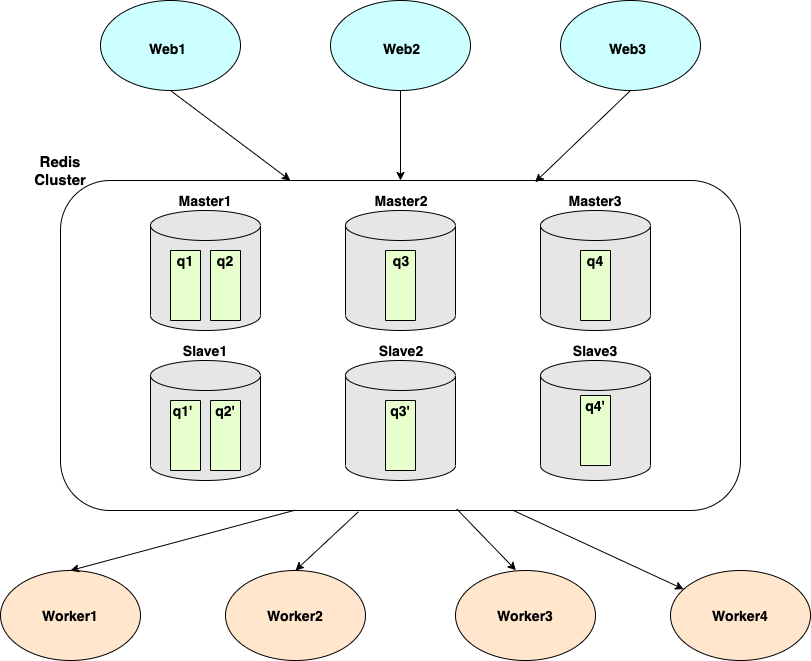

Asynq任务框架 WEB架构

WEB架构 安全监控体系

安全监控体系 集群架构

集群架构